In multi-agent reinforcement learning, you observe how agents learn to work together, compete, and sometimes create chaos in shared environments. Cooperation is driven by shared goals or incentives, while competition often emerges from individual rewards. Small changes in rules or strategies can lead to unpredictable, complex behaviors. Understanding these dynamics helps you see how simple interactions create powerful emergent phenomena. Keep exploring, and you’ll uncover how to manage and harness these behaviors effectively.

Key Takeaways

- Multi-agent RL involves simultaneous decision-making by agents, where coordination is essential to achieve shared goals.

- Emergent behaviors can arise unpredictably from simple local interactions, leading to cooperation, competition, or chaos.

- Proper incentive design encourages cooperation and stability, while misaligned rewards can cause destructive competition.

- Balancing exploration and exploitation, along with environment tuning, is crucial to manage complex agent interactions.

- Understanding and controlling emergent behaviors is key to developing stable, efficient multi-agent systems.

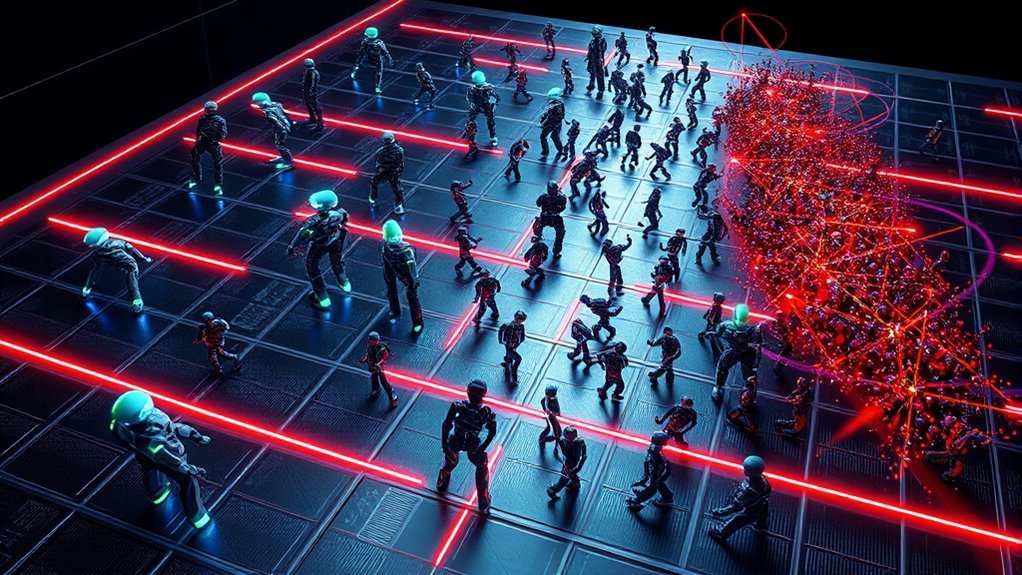

Multi-Agent Reinforcement Learning (MARL) involves multiple agents learning and making decisions simultaneously within a shared environment. As you engage with MARL, you’ll notice that a key challenge is agent coordination: how each agent aligns its actions with others to achieve common goals or optimize overall performance. When agents work together effectively, they can display sophisticated emergent behaviors that weren’t explicitly programmed but arise naturally from their interactions. These behaviors can lead to impressive cooperation, such as robots collaborating to carry objects or autonomous vehicles coordinating to navigate traffic smoothly. However, understanding and harnessing these emergent behaviors requires careful design. You need to ensure that agents have incentives to cooperate rather than compete destructively, which can be tricky in complex environments where individual rewards may conflict with collective goals.
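The conflict between individual rewards and collective goals can be made concrete with the classic Prisoner's Dilemma. The sketch below uses textbook illustrative payoffs (an assumption, not values from any particular system) and checks every joint action: the only Nash equilibrium is mutual defection, even though mutual cooperation yields the highest total reward.

```python
from itertools import product

# Illustrative Prisoner's Dilemma payoffs. Actions: "C" = cooperate, "D" = defect.
# PAYOFF[(a1, a2)] = (reward to agent 1, reward to agent 2).
PAYOFF = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}
ACTIONS = ("C", "D")


def is_nash(joint):
    """True if neither agent can gain by unilaterally changing its own action."""
    for i in range(2):
        for alt in ACTIONS:
            deviation = list(joint)
            deviation[i] = alt
            if PAYOFF[tuple(deviation)][i] > PAYOFF[joint][i]:
                return False
    return True


nash = [joint for joint in product(ACTIONS, repeat=2) if is_nash(joint)]
social_best = max(PAYOFF, key=lambda joint: sum(PAYOFF[joint]))

print("Nash equilibria:", nash)                 # [('D', 'D')]
print("Welfare-maximizing joint action:", social_best)  # ('C', 'C')
```

Self-interested agents that each best-respond end up at (D, D) with a total reward of 2, while (C, C) would give 6: exactly the gap that incentive design tries to close.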

Effective MARL relies on designing incentives to promote cooperation over competition.

In MARL, emergent behaviors often surprise even experienced researchers. They arise when simple local rules or learning algorithms interact in unpredictable ways, producing complex global phenomena. You might observe agents forming alliances, competing fiercely for resources, or developing communication protocols without explicit instructions. Such behaviors demonstrate the power and unpredictability of multi-agent systems, but they also pose challenges. For example, if agents prioritize their own success over group objectives, chaos can ensue, leading to unstable or inefficient outcomes. As you work with these systems, you’ll need to balance exploration and exploitation, fostering cooperation while preventing free-riding or malicious strategies that can undermine the entire system. Physical environments add another source of change: in maritime settings, for instance, shifting wind and waves alter conditions from moment to moment, further complicating each agent’s decisions and its coordination with others.
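One common remedy for free-riding under a shared team reward (a credit-assignment technique from the MARL literature, not something this article prescribes) is a difference reward: each agent is credited with how much the team objective would drop if its own action were removed. A minimal sketch, assuming a hypothetical coverage task where the team objective is the number of distinct targets covered and a "removed" agent is modeled as covering nothing:

```python
def team_reward(choices):
    """Hypothetical global objective: number of distinct targets covered.
    None means the agent covers nothing."""
    return len({c for c in choices if c is not None})


def difference_rewards(choices):
    """D_i = G(z) - G(z_-i): the team reward minus the team reward
    obtained when agent i's action is replaced by a null action."""
    g = team_reward(choices)
    rewards = []
    for i in range(len(choices)):
        without_i = list(choices)
        without_i[i] = None  # counterfactual: agent i does nothing
        rewards.append(g - team_reward(without_i))
    return rewards


choices = ["A", "B", "B"]  # agents 1 and 2 both cover target B
print(team_reward(choices))         # 2 (what every agent gets under a shared reward)
print(difference_rewards(choices))  # [1, 0, 0]
```

Under the shared reward, both agents piling onto target B receive the same 2 as the agent covering A. The difference reward gives each of them 0, because neither is individually responsible for covering B, so a learner optimizing this signal is pushed toward an uncovered target instead of riding on a teammate's work.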

The dynamics of agent coordination play a pivotal role in shaping the system’s stability and efficiency. You’ll find that the design of reward structures and learning algorithms greatly influences whether agents learn to cooperate or compete. In some cases, you might implement shared rewards to promote collective success; in others, individual rewards can encourage competition, which might push agents to outperform each other at the expense of overall harmony. Striking the right balance is essential for fostering beneficial emergent behaviors without spiraling into chaos. During training, you’ll observe how small changes in environment parameters or agent strategies lead to drastically different patterns of behavior, emphasizing the importance of careful tuning.
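To see how reward design alone can flip the learned behavior, here is a sketch with two independent epsilon-greedy learners playing a repeated Prisoner's Dilemma. The payoffs, learning rate, exploration rate, and episode count are illustrative assumptions, and the stateless (bandit-style) value update is a simplification of full Q-learning.

```python
import random

# Illustrative Prisoner's Dilemma payoffs. Actions: 0 = cooperate, 1 = defect.
# PAYOFF[a1][a2] = (reward to agent 1, reward to agent 2).
PAYOFF = [
    [(3, 3), (0, 5)],
    [(5, 0), (1, 1)],
]


def greedy(q, rng):
    """Highest-valued action, with ties broken at random."""
    best = max(q)
    return rng.choice([a for a, v in enumerate(q) if v == best])


def train(shared_reward, episodes=5000, alpha=0.1, epsilon=0.1, seed=0):
    """Two independent epsilon-greedy learners on a repeated matrix game.

    shared_reward=True gives both agents the team reward r1 + r2;
    otherwise each agent sees only its own payoff.
    Returns each agent's final greedy action.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0], [0.0, 0.0]]  # q[agent][action]
    for _ in range(episodes):
        acts = [
            rng.randrange(2) if rng.random() < epsilon else greedy(q[i], rng)
            for i in range(2)
        ]
        r1, r2 = PAYOFF[acts[0]][acts[1]]
        rewards = (r1 + r2, r1 + r2) if shared_reward else (r1, r2)
        for i, a in enumerate(acts):
            # Move the value of the chosen action toward the observed reward.
            q[i][a] += alpha * (rewards[i] - q[i][a])
    return [qi.index(max(qi)) for qi in q]


print("individual rewards:", train(shared_reward=False))
print("shared reward:     ", train(shared_reward=True))
```

With these payoffs, defecting pays more than cooperating under individual rewards no matter what the other agent does, while under the summed team reward cooperating always pays more, so the learners settle on mutual defection and mutual cooperation respectively. Less clear-cut games lack such dominant actions, and that is where careful tuning of rewards and parameters matters most.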

Ultimately, mastering agent coordination and understanding emergent behaviors are essential for harnessing the full potential of MARL. You’re working in a domain where simple rules can produce extraordinary outcomes, but missteps can lead to instability or unintended consequences. As you develop your multi-agent systems, keep in mind that fostering cooperation, managing competition, and mitigating chaos are ongoing challenges that require innovation, insight, and patience. With the right approach, you’ll unleash powerful, adaptive systems capable of tackling complex real-world problems through collective intelligence.

Frequently Asked Questions

How Do Multi-Agent Systems Handle Communication Failures?

When communication fails, your multi-agent system relies on communication resilience and fault tolerance to keep functioning. You design it so agents can detect issues, switch to backup channels, or operate autonomously when needed. This way, your system maintains coordination and performance despite disruptions. By building in redundancy and adaptive strategies, you help agents keep working effectively even in unreliable environments, minimizing the impact of communication failures.
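One way to implement "operate autonomously when needed" is a heartbeat timeout: an agent trusts teammates' messages only while they are recent and otherwise falls back to a local policy. The sketch below assumes a hypothetical lane-sharing task; the `Agent` class, the message payload, and both decision rules are illustrative, and the clock is injectable so the timeout can be tested without waiting.

```python
import time
from typing import Callable, Optional


class Agent:
    """Hypothetical agent: coordinates through teammates' messages while the
    link is fresh, and falls back to a local-only policy after a timeout."""

    def __init__(self, name: str, timeout_s: float,
                 clock: Callable[[], float] = time.monotonic):
        self.name = name
        self.timeout_s = timeout_s
        self.clock = clock  # injectable so tests can simulate elapsed time
        self.last_payload: Optional[dict] = None
        self.last_rx: Optional[float] = None

    def receive(self, payload: dict) -> None:
        # Timestamp with the receiver's own clock, so no clock sync is needed.
        self.last_payload = payload
        self.last_rx = self.clock()

    def link_is_up(self) -> bool:
        return self.last_rx is not None and self.clock() - self.last_rx <= self.timeout_s

    def act(self, obs: dict) -> str:
        if self.link_is_up():
            # Coordinated mode: yield if a teammate announced it is in our lane.
            return "yield" if self.last_payload.get("lane") == obs["lane"] else "go"
        # Autonomous fallback: a conservative rule based only on local sensing.
        return "yield" if obs["obstacle_ahead"] else "go"


# Usage with a fake clock.
now = [0.0]
agent = Agent("a1", timeout_s=0.5, clock=lambda: now[0])
agent.receive({"lane": 1})
obs = {"lane": 1, "obstacle_ahead": False}
coordinated = agent.act(obs)  # "yield": a fresh message says a teammate is in lane 1
now[0] = 2.0                  # 2 s of silence exceeds the 0.5 s timeout
fallback = agent.act(obs)     # "go": local rule, no obstacle sensed
print(coordinated, fallback)
```

Keeping the fallback rule conservative is a deliberate choice: when an agent cannot see its teammates' intentions, it is usually safer to act as if they might be anywhere.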

What Are the Ethical Considerations in Multi-Agent Reinforcement Learning?

You should consider the ethical dilemmas in multi-agent reinforcement learning, because these systems can have considerable societal impact. When designing these agents, you need to ensure they meet ethical standards, avoid bias, and promote fairness. Ignoring these considerations could lead to unintended harm or misuse. By proactively addressing ethical issues, you help create responsible AI that benefits society while minimizing risks and fostering trust in automated decision-making.

How Scalable Are Current Multi-Agent RL Algorithms?

You’ll find that current multi-agent RL algorithms face significant scalability challenges, especially as the number of agents increases. Agent coordination becomes more complex, making efficient learning and good performance harder to achieve. While some algorithms improve scalability through techniques such as decentralized training or parameter sharing across agents, you still encounter limitations in large, dynamic environments. Overall, scalability remains an open challenge, and handling more agents effectively still calls for new solutions.
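A quick calculation shows why agent count hurts a centralized approach: a single controller choosing for all agents faces a joint action space that grows exponentially with the number of agents, while agents that each choose only their own action face a cost that grows linearly. Five actions per agent is assumed purely for illustration.

```python
def joint_action_space(num_agents: int, actions_per_agent: int) -> int:
    """Joint actions a single centralized controller must choose among."""
    return actions_per_agent ** num_agents


def independent_outputs(num_agents: int, actions_per_agent: int) -> int:
    """Total action values when each agent scores only its own actions."""
    return num_agents * actions_per_agent


ACTIONS = 5  # illustrative assumption
for n in (2, 5, 10, 20):
    print(f"{n:>2} agents: joint={joint_action_space(n, ACTIONS):,}  "
          f"independent={independent_outputs(n, ACTIONS)}")
```

With 10 agents the joint space already has 5^10 = 9,765,625 entries, versus 50 per-agent outputs. Decentralization trades this blow-up for a different difficulty: each agent's environment becomes non-stationary because the other agents are learning at the same time.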

Can Multi-Agent RL Be Applied to Real-Time Decision-Making?

You can apply multi-agent RL to real-time decision-making by leveraging distributed coordination to manage multiple agents efficiently. While adversarial strategies pose challenges, advancements in algorithms help you handle such complexities. Real-time scenarios demand quick, adaptive responses, and current multi-agent RL methods are increasingly capable of meeting these needs. With ongoing improvements, you’re better equipped to deploy multi-agent RL in dynamic environments requiring instant decisions.

How Do Agents Adapt to Dynamic Environments in Multi-Agent Settings?

Think of agents as sailors navigating stormy seas: they adapt to environment dynamics by constantly updating their strategies. In multi-agent settings, you need to design systems where agents observe changes, predict future states, and adjust their actions accordingly. Agent adaptation involves learning from interactions, sharing information, and maintaining flexibility. This helps agents stay afloat amid shifting circumstances, making your multi-agent system resilient and capable of thriving in unpredictable, dynamic environments.
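Part of what makes multi-agent settings dynamic is that every other learning agent belongs to each agent's environment, so the payoff of an action can shift over time. A small illustration of one standard adaptation mechanism, assuming a hypothetical reward signal that drops abruptly halfway through: a constant step size keeps weighting recent experience, whereas a sample average gives every past reward equal weight and adapts ever more slowly.

```python
def track(rewards, alpha=None):
    """Incrementally estimate a reward signal.
    alpha=None uses the sample average (step size 1/n); otherwise a constant step size."""
    q, history = 0.0, []
    for n, r in enumerate(rewards, start=1):
        step = 1.0 / n if alpha is None else alpha
        q += step * (r - q)
        history.append(q)
    return history


# Hypothetical non-stationary signal: an action's payoff falls from 1.0 to 0.0
# halfway through, e.g. because other agents changed their policies.
rewards = [1.0] * 100 + [0.0] * 100
avg = track(rewards)
const = track(rewards, alpha=0.1)

print(f"sample average after shift:     {avg[-1]:.3f}")    # 0.500
print(f"constant alpha=0.1 after shift: {const[-1]:.3f}")  # 0.000
```

After 100 steps at the new payoff, the constant-step estimate has essentially reached 0, while the sample average is still stuck halfway at 0.5. The price of this responsiveness is noisier estimates when the environment happens to be stable.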

Conclusion

So here you are, marveling at how multi-agent RL turns chaos into cooperation, until the next bug, a bout of runaway competition, or plain chaos takes over again. It’s almost poetic how these agents mimic human folly, isn’t it? Perhaps, in their quest to emulate us, they’ll teach us a thing or two about collaboration; just don’t hold your breath. After all, in the grand scheme, isn’t chaos just the universe’s way of keeping things interesting?