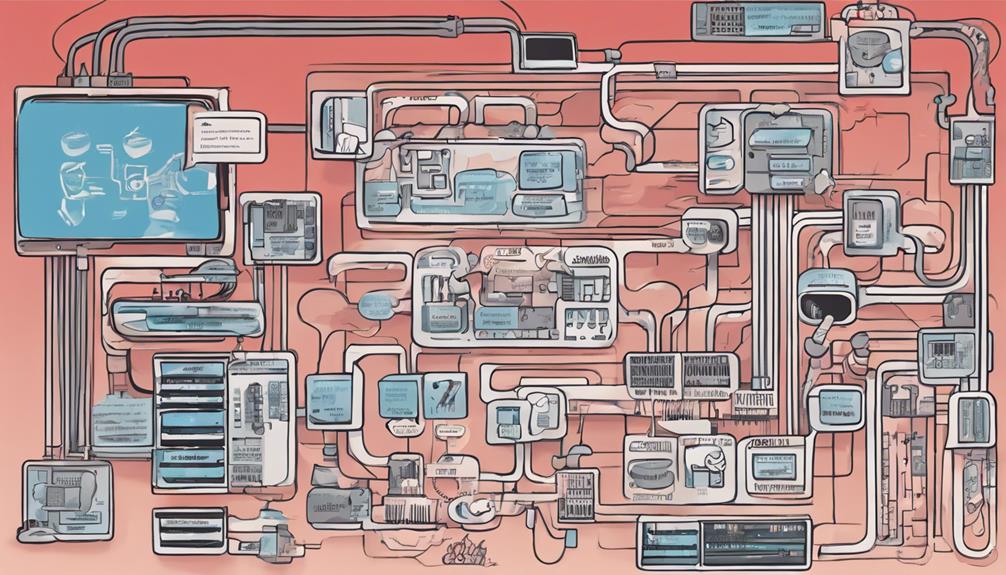

This article walks through Kubernetes architecture, starting with the control plane components: kube-apiserver, etcd, kube-scheduler, kube-controller-manager, and cloud-controller-manager. Worker nodes rely on the kubelet, kube-proxy, and a container runtime to run pods. Container networking gives every pod its own IP address and is configured by CNI plugins such as Flannel or Calico. Later sections dig deeper into the API server, the scheduler's responsibilities, the controller manager's role, and the cloud controller manager's functions.

Key Takeaways

- Core Components: kube-apiserver, kube-scheduler, kube-controller-manager, cloud-controller-manager, etcd for critical data storage.

- Control Plane Components: kube-apiserver, etcd, kube-scheduler, kube-controller-manager, cloud-controller-manager for cluster management.

- Worker Node Components: kubelet, kube-proxy, container runtime, pods for container management.

- Container Networking: CNI for pod networking, unique IP addresses, plugins like Flannel, Calico, Weave Net.

- Functionality: API server for central management, scheduler for workload optimization, controller manager for orchestration.

Core Components Overview

Explore the main components that form the foundation of Kubernetes architecture.

The Kubernetes core components consist of the kube-apiserver, which serves as the front end for Kubernetes, handling API requests and acting as the entry point for the control plane.

The kube-scheduler decides which node each new pod runs on, based on the pod's resource requirements and the constraints that apply in the cluster.

Additionally, the kube-controller-manager oversees various controllers responsible for maintaining the cluster's desired state.

Another important component is the cloud-controller-manager, which interacts with the cloud provider's API to manage the underlying infrastructure.

Finally, etcd, a distributed key-value store, holds the cluster's state and configuration data and keeps that data consistent across its replicas.

Understanding these core components is essential to comprehending how Kubernetes manages containerized applications efficiently.

Control Plane Components

The Control Plane Components in Kubernetes form the backbone of cluster management, comprising essential elements like kube-apiserver, etcd, kube-scheduler, kube-controller-manager, and cloud-controller-manager.

Key Points:

- kube-apiserver: This component acts as the front end for the Kubernetes control plane, offering the API through which cluster operations are executed.

- etcd: As a distributed key-value store, etcd plays an important role in storing cluster configuration data and maintaining consistency across the cluster.

- kube-scheduler: Responsible for optimal pod placement, the kube-scheduler makes decisions on where to run pods based on factors such as resource requirements and constraints.

These control plane components work together to keep the cluster running. The kube-controller-manager runs the controllers that drive the cluster toward its desired state, and the cloud-controller-manager integrates the cluster with the cloud provider's API.

Each component has a distinct part in managing and orchestrating the cluster's resources.

Worker Node Components

Moving on from the control plane, let's now focus on the worker node components. Worker nodes are where containers actually run, under the direction of the control plane.

Key components on a worker node include:

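- kubelet: the node agent that makes sure the containers described in each pod spec are running and healthy.

- kube-proxy: maintains network rules on the node so that traffic sent to a Service reaches the right pods.

- container runtime: the software that actually runs the containers.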

- pods: the basic deployment units in Kubernetes that run containers.

The kubelet is essential for overseeing containers on the node, making sure they're running as expected and maintaining their health.

Kube-proxy maintains the network rules on each node that route Service traffic to pods, which lets workloads on the node communicate with the rest of the cluster.

The container runtime engine handles the execution of containers, while pods encapsulate one or more containers along with shared storage and networking resources, forming the fundamental scheduling and execution unit on worker nodes in Kubernetes.
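To make the kubelet's job more concrete, here is a minimal sketch of the comparison it performs: look at what a pod spec asks for, look at what is running, and work out what needs to be started. The `Pod` class and `sync_pod` function are hypothetical names for illustration, not part of any Kubernetes API, and the real kubelet does far more (health probes, volumes, restarts with backoff).

```python
from dataclasses import dataclass


@dataclass
class Pod:
    """Toy pod: a name plus the containers that share its network and storage."""
    name: str
    containers: list[str]


def sync_pod(pod: Pod, running: set[str]) -> list[str]:
    """Return the containers that must be started so the pod matches its spec."""
    return [c for c in pod.containers if c not in running]


pod = Pod(name="web", containers=["nginx", "log-shipper"])

# Only "nginx" is currently running, so the sidecar still has to be started.
to_start = sync_pod(pod, running={"nginx"})
print(to_start)
```

In this toy model the container runtime would be the thing that receives the `to_start` list and launches each container.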

Container Networking Details

Understanding the intricacies of container networking in Kubernetes is vital for ensuring seamless communication between pods and containers within the cluster.

Here are some key points to help you grasp Kubernetes networking:

- Container Network Interface (CNI): CNI plugins are responsible for configuring networking for each pod, enabling efficient communication within the cluster.

- Unique IP Addresses: Every pod in Kubernetes is assigned its own IP address, unique within the cluster, so pods can address each other directly.

- Networking Plugins: Solutions like Flannel, Calico, and Weave Net play an essential role in managing pod connectivity, ensuring efficient data transfer and communication between containers.
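The idea of one unique IP per pod can be sketched with Python's standard `ipaddress` module. This assumes each node owns its own pod subnet and hands out addresses sequentially, which is a simplification; real CNI plugins differ in how they allocate and track addresses. The function name, the subnet `10.244.1.0/24`, and the reserved gateway address are all illustrative assumptions.

```python
import ipaddress


def allocate_pod_ips(node_cidr: str, pod_names: list[str]) -> dict[str, str]:
    """Assign each pod the next free address from the node's pod subnet."""
    network = ipaddress.ip_network(node_cidr)
    hosts = iter(network.hosts())  # usable addresses, in order
    next(hosts)  # assumption: first usable address is reserved for the node's gateway
    # Note: raises StopIteration if the subnet runs out of addresses.
    return {name: str(next(hosts)) for name in pod_names}


ips = allocate_pod_ips("10.244.1.0/24", ["web-1", "web-2", "db-1"])
for pod_name, ip in ips.items():
    print(pod_name, ip)
```

Because every pod gets a distinct address, two pods on the same node can both listen on port 80 without conflict.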

API Server Functionality

The API server handles every request to the Kubernetes API, whether it comes from users, from other control plane components, or from nodes, and serves as the primary interface for cluster management. Each request passes through authentication, authorization, admission control, and validation before it is accepted, which keeps interactions secure and consistent.

Scalability is achieved by deploying multiple instances, optimizing load distribution and enhancing system availability.

API Server Operations

API Server Operations within Kubernetes architecture are important for managing resources securely and efficiently. Here are key aspects of API server functionality:

- Central Management Point:

The API server serves as the central management point for all operations in the cluster, acting as the entry point for interacting with the Kubernetes control plane.

- Authentication and Authorization:

The API server handles authentication and authorization processes, ensuring that only authorized users can access and modify resources within the cluster.

- Validation and Admission Control:

API server operations include validation and admission control mechanisms to verify and accept or reject requests based on predefined policies, contributing to maintaining the cluster's desired state.
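The checks described above can be pictured as a chain that a request must pass through in order. The sketch below is a toy model, not the real kube-apiserver: the `Request` class, the hard-coded users and permissions, the "no privileged pods" admission rule, and the exact status codes are all assumptions chosen for illustration, and the real server interleaves admission and validation in more stages.

```python
from dataclasses import dataclass


@dataclass
class Request:
    user: str
    verb: str
    resource: str
    body: dict


# Hypothetical, hard-coded policy data for illustration only.
KNOWN_USERS = {"alice", "bob"}
PERMISSIONS = {("alice", "create", "pods"), ("bob", "get", "pods")}


def handle(req: Request) -> tuple[int, str]:
    """Run a request through authentication, authorization, admission, validation."""
    if req.user not in KNOWN_USERS:
        return (401, "authentication failed")
    if (req.user, req.verb, req.resource) not in PERMISSIONS:
        return (403, "not authorized")
    # Admission control: a toy policy that rejects privileged pods.
    if req.verb == "create" and req.body.get("privileged"):
        return (403, "rejected by admission policy")
    # Validation: the object must at least have a name.
    if req.verb == "create" and not req.body.get("name"):
        return (422, "validation failed: name is required")
    return (201, "ok") if req.verb == "create" else (200, "ok")


print(handle(Request("alice", "create", "pods", {"name": "web"})))
print(handle(Request("bob", "create", "pods", {"name": "web"})))
```

Only a request that clears every stage would be persisted; a failure at any stage short-circuits the rest.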

API Server Scalability

Achieving scalability within Kubernetes architecture involves deploying multiple instances of the API server to efficiently handle increasing workloads and balance traffic. The API server in Kubernetes plays a crucial role in managing cluster resources through the Kubernetes API, acting as the front end for the control plane. It efficiently handles both external and internal requests, validating and processing them to ensure smooth communication within the cluster. By running multiple instances of the API server, horizontal scaling becomes possible, allowing the system to adapt to the demands of a growing Kubernetes cluster.

| Key Point | Description |
|---|---|
| Scalability | Deploying multiple instances to handle increased workloads. |
| Kubernetes API | Exposes the API for managing cluster resources. |
| Control Plane | Acts as the front end for the control plane. |
| External Requests | Handles external requests efficiently. |
| Internal Requests | Processes internal requests within the cluster. |

API Server Front-end

To effectively manage interactions within the Kubernetes cluster, the API server front-end facilitates communication by validating and processing requests from both external and internal sources.

Here are three key points about the API server front-end functionality:

- Interface for Users: The API server serves as the primary interface for users to interact with the Kubernetes cluster. Users can issue commands through tools like kubectl or make REST calls to communicate with the cluster effectively.

- Validation and Processing: One of the core functions of the API server is to validate and process incoming requests. By ensuring that requests meet the necessary criteria, the API server helps maintain the integrity and security of the cluster.

- Load Balancing and Redundancy: In larger Kubernetes deployments, multiple instances of the API server can be deployed for load balancing and redundancy purposes. This scalability feature enhances the cluster's performance and resilience to failures.
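As a rough illustration of load balancing and redundancy across API server instances, the sketch below rotates through a list of endpoints and skips any that are marked unhealthy. In practice this job is usually done by a load balancer placed in front of the instances rather than by custom code; the `ApiServerPool` class and the endpoint names are hypothetical.

```python
class ApiServerPool:
    """Round-robin over API server endpoints, skipping unhealthy ones."""

    def __init__(self, endpoints: list[str]) -> None:
        self.endpoints = list(endpoints)
        self.healthy = set(self.endpoints)
        self._next = 0

    def mark_down(self, endpoint: str) -> None:
        self.healthy.discard(endpoint)

    def pick(self) -> str:
        # Try each endpoint at most once per call.
        for _ in range(len(self.endpoints)):
            candidate = self.endpoints[self._next % len(self.endpoints)]
            self._next += 1
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy API server instance")


pool = ApiServerPool(["api-0", "api-1", "api-2"])
first_round = [pool.pick() for _ in range(3)]

pool.mark_down("api-1")  # simulate one instance failing
second_round = [pool.pick() for _ in range(3)]
print(first_round, second_round)
```

With one instance down, requests keep flowing to the remaining two, which is the resilience benefit the redundancy point describes.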

Scheduler Responsibilities

Now, let's talk about the essential responsibilities of the scheduler in Kubernetes.

It manages the placement of pods on worker nodes by considering factors like data locality, affinity, and resource availability.

The scheduler's main aim is to optimize workload distribution and enhance resource utilization within the cluster.

Pod Scheduling Workflow

The Kubernetes scheduler efficiently assigns pods to nodes based on resource needs and constraints. When it comes to pod scheduling workflow, the scheduler's responsibilities are essential for maintaining an optimized cluster environment.

Here's what you need to know:

- Resource Allocation: The scheduler considers the resource requirements of pods to make sure they're placed on nodes that can meet their demands without causing resource contention.

- Data Locality and Affinity: By understanding data locality and affinity requirements, the scheduler makes intelligent decisions to place pods close to the data they need or alongside other related services for improved performance.

- Workload Distribution: Through workload distribution, the scheduler aims to evenly spread pods across available nodes, preventing any single node from becoming overwhelmed while maximizing resource utilization and cluster efficiency.
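The workflow above can be sketched as two steps: filter out nodes that cannot fit the pod, then score the remaining nodes and pick the best. This is a simplified model with an invented scoring rule (prefer the node with the most CPU, then memory, left over after placement, which tends to spread pods out). The class names, field names, and numbers are assumptions for illustration, not the real kube-scheduler's algorithm.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_m: int   # free CPU in millicores
    free_mem_mi: int  # free memory in MiB


@dataclass
class PodSpec:
    name: str
    cpu_m: int
    mem_mi: int


def schedule(pod: PodSpec, nodes: list[Node]) -> Optional[str]:
    """Return the name of the chosen node, or None if no node fits."""
    # Step 1: filter out nodes without enough free resources.
    feasible = [
        n for n in nodes
        if n.free_cpu_m >= pod.cpu_m and n.free_mem_mi >= pod.mem_mi
    ]
    if not feasible:
        return None  # the pod stays pending
    # Step 2: score by resources left after placement (toy spreading rule).
    best = max(
        feasible,
        key=lambda n: (n.free_cpu_m - pod.cpu_m, n.free_mem_mi - pod.mem_mi),
    )
    return best.name


nodes = [
    Node("node-a", free_cpu_m=500, free_mem_mi=1024),
    Node("node-b", free_cpu_m=2000, free_mem_mi=4096),
    Node("node-c", free_cpu_m=4000, free_mem_mi=8192),
]
chosen = schedule(PodSpec("web", cpu_m=1000, mem_mi=1024), nodes)
print(chosen)
```

Here `node-a` is filtered out for lack of CPU, and `node-c` wins the scoring step because it has the most headroom left.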

Factors for Scheduling

The Kubernetes scheduler's responsibilities include considering various factors such as resource requirements, data locality, and affinity/anti-affinity rules when making pod scheduling decisions.

By analyzing pod specifications, node capacity, and cluster constraints, the Kubernetes scheduler guarantees efficient distribution of workloads across available nodes in the cluster.

It automates the process of assigning pods to suitable nodes based on defined criteria and policies set by administrators.

Through intelligent scheduling, the Kubernetes scheduler optimizes resource utilization, thereby enhancing the performance, fault tolerance, and scalability of applications within the cluster.

By taking into account affinity rules, anti-affinity rules, and other parameters, the scheduler plays an essential role in orchestrating the placement of pods within the Kubernetes cluster.

This meticulous approach to scheduling helps in maintaining a balanced and effective deployment environment for your applications.
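Affinity and anti-affinity rules can be thought of as extra filters on the candidate nodes. The sketch below models them with plain sets of app labels per node; the function names and the sample placement data are hypothetical, and real rules are expressed in the pod spec with label selectors and topology keys rather than in code like this.

```python
def filter_anti_affinity(app: str, placements: dict[str, set[str]]) -> list[str]:
    """Keep only nodes that do NOT already run a pod of the given app."""
    return [node for node, apps in placements.items() if app not in apps]


def filter_affinity(app: str, placements: dict[str, set[str]]) -> list[str]:
    """Keep only nodes that already run a pod of the given app."""
    return [node for node, apps in placements.items() if app in apps]


# Which apps currently have pods on which node (illustrative data).
placements = {
    "node-a": {"web", "cache"},
    "node-b": {"db"},
    "node-c": set(),
}

# Anti-affinity: spread "web" replicas so they never share a node.
spread_candidates = filter_anti_affinity("web", placements)

# Affinity: place a new pod next to the "cache" it depends on.
colocate_candidates = filter_affinity("cache", placements)
print(spread_candidates, colocate_candidates)
```

Anti-affinity helps fault tolerance by keeping replicas apart, while affinity helps performance by keeping related pods together.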

Optimization of Workloads

How does the Kubernetes scheduler enhance the distribution of workloads across the cluster?

The scheduler in Kubernetes plays an essential role in guaranteeing efficient workload distribution and resource allocation within the Kubernetes environment. Here's how it achieves this:

- Automated Workload Placement: The scheduler automates the process of selecting suitable nodes for new pods based on factors like resource requirements, constraints, and other scheduling considerations. This automation improves resource utilization and performance by placing workloads effectively across the cluster.

- Efficient Resource Allocation: By considering data locality, deadlines, and other relevant scheduling factors, the scheduler makes informed decisions to distribute workloads optimally. This optimization of resource allocation helps in maintaining the desired state of the cluster and ensuring effective utilization of resources.

- Orchestration of Application Deployment: The scheduler plays a critical role in orchestrating the deployment of applications by determining the best nodes for workload placement. This orchestration ensures that applications run smoothly and effectively within the Kubernetes environment.

Kubernetes Controller Manager Role

Responsible for managing controllers that regulate the state of the cluster, the Kubernetes Controller Manager plays an important role in overseeing various components within the architecture. It guarantees the proper functioning of controllers like the Node Controller and Replication Controller, maintaining the desired state of the cluster.

By watching the cluster's state and acting on any drift, the Controller Manager automates tasks such as replicating pods, keeping Service endpoints up to date, and managing the lifecycle of namespaces. Each controller compares the current state with the desired state and makes the changes needed to bring the two into line.

The Controller Manager's role in pod replication is particularly notable, as it's responsible for ensuring the correct number of pods are running at all times, thereby contributing to the stability and scalability of the Kubernetes environment.

Through its capabilities, the Kubernetes Controller Manager greatly enhances the management and operational efficiency of the cluster.
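The pod replication behaviour described above follows a pattern often called a reconcile loop: compare the desired count with what is running and correct the difference. The following is a minimal sketch of one pass of such a loop, with a hypothetical `reconcile` function and an invented naming scheme for new pods; real controllers watch the API server and create or delete pod objects instead of editing a list.

```python
def reconcile(desired_replicas: int, running: list[str], prefix: str = "web") -> list[str]:
    """Return the pod list after one reconcile pass toward the desired count."""
    pods = list(running)
    counter = 0
    # Too few pods: create new ones with unused names.
    while len(pods) < desired_replicas:
        name = f"{prefix}-{counter}"
        counter += 1
        if name not in pods:
            pods.append(name)
    # Too many pods: remove the most recently added ones.
    while len(pods) > desired_replicas:
        pods.pop()
    return pods


scaled_up = reconcile(3, ["web-0"])
scaled_down = reconcile(1, ["web-0", "web-1", "web-2"])
print(scaled_up, scaled_down)
```

Running the same pass again on an already-correct list changes nothing, which is what lets a controller run it repeatedly without side effects.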

Cloud Controller Manager Functions

To seamlessly integrate Kubernetes clusters with various cloud providers, the Cloud Controller Manager functions by embedding cloud-specific control logic within the Kubernetes control plane. This enables the interaction with cloud provider APIs, managing resources and services on the chosen cloud platform efficiently.

Here are three key functions of the Cloud Controller Manager:

- Decoupling Kubernetes Clusters: By running cloud provider-specific controllers, the Cloud Controller Manager helps decouple Kubernetes clusters from cloud platform specifics, enhancing scalability and resilience.

- Streamlining Control Loops: It combines logically independent control loops into a single binary, streamlining cloud-specific functionalities within Kubernetes for improved efficiency.

- Facilitating Connectivity: The primary function of the Cloud Controller Manager is to facilitate connectivity between Kubernetes clusters and various cloud providers, ensuring seamless integration and smooth operation.
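The decoupling idea can be sketched as a provider interface: generic controller logic calls an abstract method, and each cloud supplies its own implementation. Everything named here (`CloudProvider`, `FakeCloud`, `service_controller`, and the documentation-range IP addresses) is hypothetical and only meant to show the shape of the pattern, not the actual cloud-controller-manager interfaces.

```python
from abc import ABC, abstractmethod


class CloudProvider(ABC):
    """What the generic controller needs from any cloud (illustrative)."""

    @abstractmethod
    def ensure_load_balancer(self, service_name: str) -> str:
        """Create the load balancer if needed and return its address."""


class FakeCloud(CloudProvider):
    """Stand-in provider that records load balancers in memory."""

    def __init__(self) -> None:
        self.load_balancers: dict[str, str] = {}

    def ensure_load_balancer(self, service_name: str) -> str:
        # Idempotent: calling twice for the same service reuses the address.
        if service_name not in self.load_balancers:
            address = f"203.0.113.{len(self.load_balancers) + 10}"
            self.load_balancers[service_name] = address
        return self.load_balancers[service_name]


def service_controller(services: dict[str, dict], cloud: CloudProvider) -> dict[str, str]:
    """Provision a cloud load balancer for every LoadBalancer-type service."""
    return {
        name: cloud.ensure_load_balancer(name)
        for name, spec in services.items()
        if spec["type"] == "LoadBalancer"
    }


cloud = FakeCloud()
services = {"web": {"type": "LoadBalancer"}, "db": {"type": "ClusterIP"}}
addresses = service_controller(services, cloud)
print(addresses)
```

Swapping `FakeCloud` for another `CloudProvider` subclass would leave `service_controller` untouched, which is the point of keeping cloud-specific logic behind an interface.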

Frequently Asked Questions

What Are the Components in Kubernetes Architecture?

In Kubernetes architecture, the control plane consists of kube-apiserver, kube-controller-manager, kube-scheduler, and etcd as the key-value store. Worker nodes run applications using the kubelet, kube-proxy, a container runtime, and pods. The cloud-controller-manager handles cloud integration.

What Is Kubernetes Based Architecture?

Kubernetes architecture is split between a control plane and worker nodes. The control plane components manage the cluster, while worker nodes run application workloads. This setup emphasizes scalability, fault tolerance, and automation for efficient containerized workload deployment and management.

What Are the Components of Kubernetes Network?

In Kubernetes, the components of the network include a virtual network model assigning unique IP addresses to pods. CNI plugins like Flannel and Calico manage pod-to-pod communication, ensuring connectivity. Pods communicate via these IPs for network operations.

What Are Three of the Components of a Kubernetes Master Machine?

Three of the main components on a Kubernetes master (control plane) machine are kube-apiserver, kube-scheduler, and kube-controller-manager. They work together to manage the cluster.

Can You Explain the Main Components of Kubernetes Architecture?

At the highest level, Kubernetes architecture consists of nodes, pods, and clusters. Nodes are the individual machines that run containerized applications. A pod is a group of one or more containers deployed together on a node. A cluster is a set of nodes, together with the control plane that manages their workloads and provides high availability and scalability.

Conclusion

Now that you've explored the main components of Kubernetes architecture, think of it like a symphony orchestra. The control plane components are the conductors, guiding the worker node components to play harmoniously together.

The API server is the sheet music, providing instructions for the performance. Just like a well-orchestrated concert, Kubernetes architecture guarantees that all elements work seamlessly to create a beautiful and efficient system.

Keep exploring and conducting your own Kubernetes symphony!